The result is: Two author web scrape on same row Other media web scraper examples

Then in the adjancent cell, C1, I add another formula to collect the second author works by using 2 to return the author’s name in the second position of the array returned by the IMPORTXML function. The new formula the second argument is 1, which limits to the first name. To do this, I use an Index formula to limit the request to the first author, so the result exists only on that row. a long list of URLs in column A), then you’ll want to adjust the formula to show both the author names on the same row. This is fine for a single-use case but if your data is structured in rows (i.e. The formula in step 4 above still works and will return both the names in separate cells, one under the other: Two author web scrape using importXML In this case there are two authors in the byline. The xpath-query, looks for span elements with a class name “byline-author”, and then returns the value of that element, which is the name of our author.Ĭopy this formula into the cell B1, next to our final output for the New York Times example is as follows: Basic web scraping example using importXML in Google Sheets Web Scraper example with multi-author articles We’re going to use the IMPORTXML function in Google Sheets, with a second argument (called “xpath-query”) that accesses the specific HTML element above.

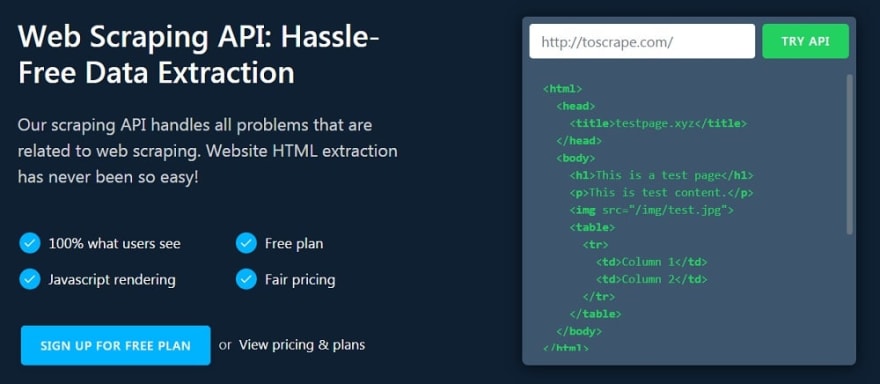

#Easy webscraper code#

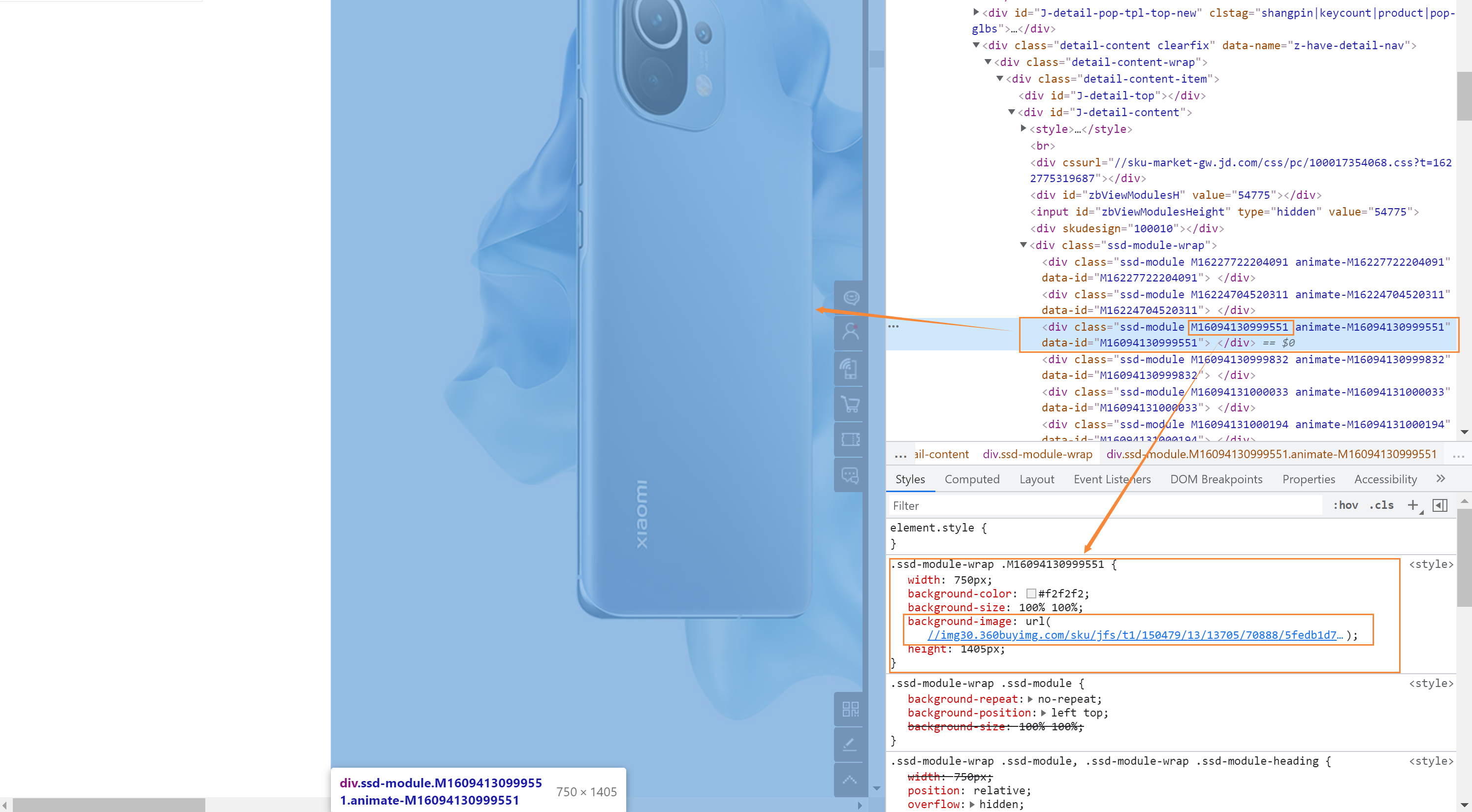

In the new developer console window, there is one line of HTML code that we’re interested in, and it’s the highlighted one:

This brings up the developer inspection window where we can inspect the HTML element for the byline: New York Times element in developer console Hover over the author’s byline and right-click to bring up the menu and click "Inspect Element" as shown in the following screenshot: New York Times inspect element selection But first we need to see how the New York Times labels the author on the webpage, so we can then create a formula to use going forward. Note – I know what you’re thinking, wasn’t this supposed to be automated?!? Yes, and it is. Navigate to the website, in this example the New York Times: New York Times screenshot Let’s take a random New York Times article and copy the URL into our spreadsheet, in cell A1: Example New York Times URL Here # we the title is given by the text inside title % html_element ( "h2" ) %>% html_text2 ( ) title #> "The Phantom Menace" "Attack of the Clones" #> "Revenge of the Sith" "A New Hope" #> "The Empire Strikes Back" "Return of the Jedi" #> "The Force Awakens" # Or use html_attr() to get data out of attributes.Grab the solution file for this tutorial:įor the purposes of this post, I’m going to demonstrate the technique using posts from the New York Times. # Then use html_element() to extract one element per film. #> \nThe Force Awakens\n\n\nReleased: 2015. #> \nReturn of the Jedi\n\n\nReleased: 1983. #> \nThe Empire Strikes Back\n\n\nReleased. #> \nRevenge of the Sith\n\n\nReleased: 200. #> \nAttack of the Clones\n\n\nReleased: 20. Library ( rvest ) # Start by reading a HTML page with read_html(): starwars corresponds # to a different film films % html_elements ( "section" ) films #> #> \nThe Phantom Menace\n\n\nReleased: 1999.
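The IMPORTXML and INDEX steps described above can be approximated outside Google Sheets. Going by the description, the B1 formula is presumably along the lines of `=IMPORTXML(A1, "//span[@class='byline-author']")`, and the same-row variant wraps it as `=INDEX(IMPORTXML(A1, "//span[@class='byline-author']"), 1)` in B1 and the same call with `2` in C1; treat these as reconstructions, not the author's exact formulas. The minimal Python sketch below mirrors that logic under stated assumptions. Python's standard library has no full XPath engine, so `html.parser` is used to match the same condition (a `span` whose `class` is exactly `byline-author`). The `PAGES` dictionary, the example.com URLs and the helper names (`BylineParser`, `byline_authors`, `sheets_index`, `author_row`) are hypothetical stand-ins, and nothing is fetched over the network. The class name comes from the post and may not match the New York Times' current markup.

```python
from html.parser import HTMLParser


class BylineParser(HTMLParser):
    """Collect the text of every <span class="byline-author"> element.

    Approximates the XPath //span[@class='byline-author']: the class
    attribute must equal "byline-author" exactly, as in the XPath test.
    """

    def __init__(self):
        super().__init__()
        self.authors = []
        self._depth = 0      # > 0 while inside a matching span
        self._buffer = []    # text pieces of the current matching span

    def handle_starttag(self, tag, attrs):
        if tag != "span":
            return
        if self._depth:
            # Nested span inside a matching span: count depth so the
            # matching span's own end tag is recognised correctly.
            self._depth += 1
        elif dict(attrs).get("class") == "byline-author":
            self._depth = 1
            self._buffer = []

    def handle_endtag(self, tag):
        if tag == "span" and self._depth:
            self._depth -= 1
            if self._depth == 0:
                self.authors.append("".join(self._buffer).strip())

    def handle_data(self, data):
        if self._depth:
            self._buffer.append(data)


def byline_authors(html):
    """Return author names in document order, like IMPORTXML's result array."""
    parser = BylineParser()
    parser.feed(html)
    parser.close()
    return parser.authors


def sheets_index(values, n):
    """1-based lookup like =INDEX(range, n); "" when n is out of range."""
    return values[n - 1] if 1 <= n <= len(values) else ""


def author_row(url, html):
    """One row per URL: the URL (column A), first author (B), second author (C)."""
    authors = byline_authors(html)
    return [url, sheets_index(authors, 1), sheets_index(authors, 2)]


# Hypothetical pages standing in for fetched articles (no network access).
PAGES = {
    "https://example.com/single": '<p>By <span class="byline-author">Jane Doe</span></p>',
    "https://example.com/double": (
        '<p>By <span class="byline-author">Jane Doe</span> and '
        '<span class="byline-author">John Roe</span></p>'
    ),
}

if __name__ == "__main__":
    for url, html in PAGES.items():
        print(author_row(url, html))
```

Running it prints one row per URL, with an empty third column for the single-author page. One design choice differs from Sheets: `sheets_index` returns an empty string when the position is out of range, whereas `INDEX` in Sheets would typically return an error in that case, so the C1 formula may need an `IFERROR` wrapper when some articles have only one author.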
